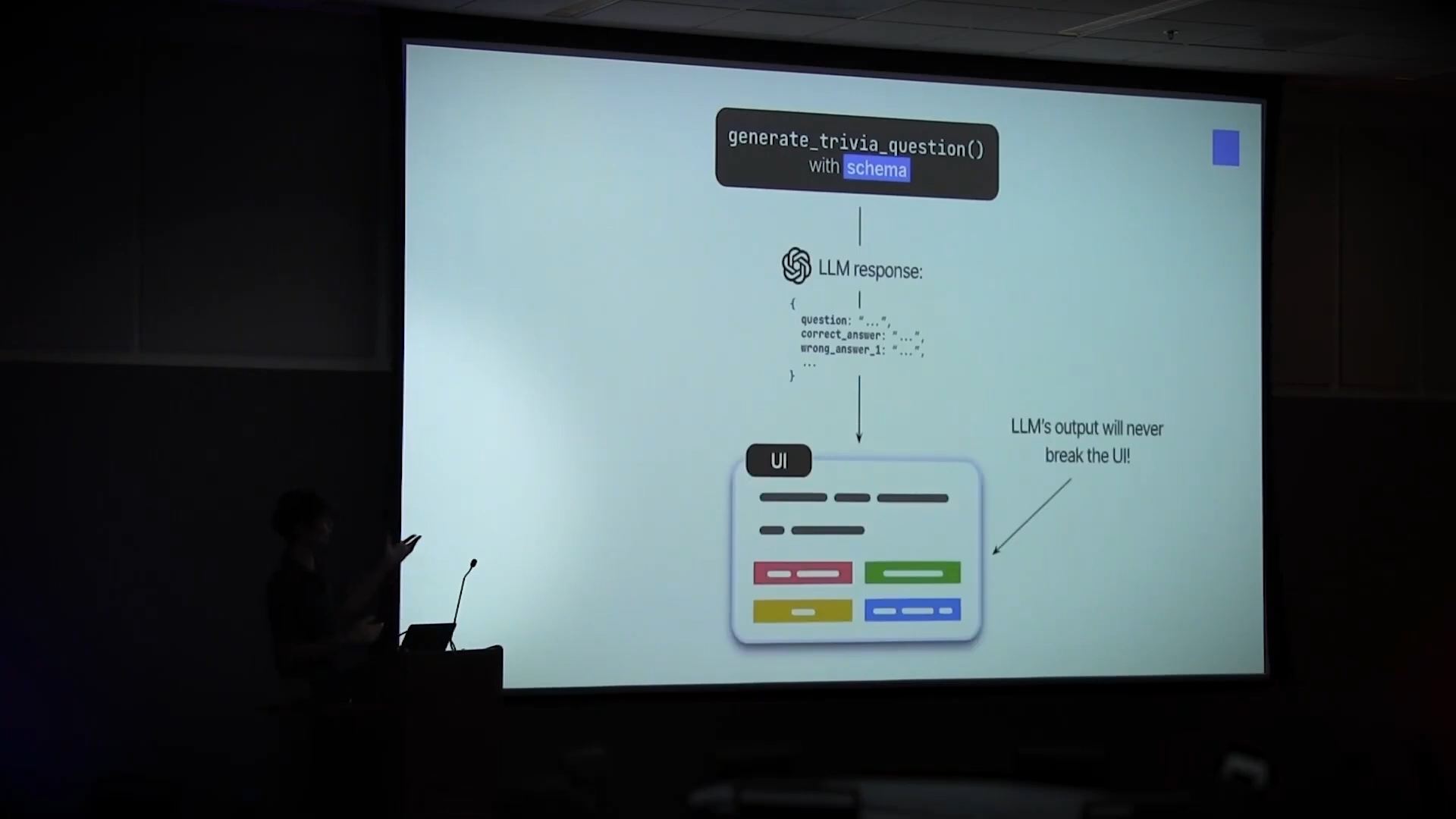

Taming LLMs with Structured Outputs

At DevIgnition 2024, I gave a talk on using structured outputs to get better responses from large language models (LLMs).

I was particularly excited about this topic: I've been working with LLMs for a while now, and I've found that structured outputs can be a game-changer when you're integrating LLMs into your software.
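To give a rough feel for why this matters, here's a minimal Python sketch. It is not taken from the talk: the `Sentiment` shape, the allowed labels, and the `parse_sentiment` helper are all hypothetical names I'm using for illustration, and the JSON string stands in for whatever a model would return. The idea is simply that when a reply is expected to match a known structure, your code can validate it up front instead of picking through free-form text.

```python
import json
from dataclasses import dataclass

# Hypothetical target structure for a model reply (an assumption for this sketch).
ALLOWED_LABELS = {"positive", "negative", "neutral"}


@dataclass
class Sentiment:
    label: str
    confidence: float


def parse_sentiment(raw: str) -> Sentiment:
    """Parse a JSON reply and validate it, raising ValueError if it does not fit."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc

    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")

    label = data.get("label")
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"unexpected label: {label!r}")

    confidence = data.get("confidence")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    return Sentiment(label=label, confidence=float(confidence))


if __name__ == "__main__":
    # Stand-in for a model reply; a real integration would get this from an API call.
    reply = '{"label": "positive", "confidence": 0.93}'
    print(parse_sentiment(reply))
```

If the reply doesn't fit the expected shape, the caller gets a single `ValueError` to handle (for example by retrying the request) rather than a subtle bug further downstream.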

You can watch a recording of the talk here:

If you'd prefer to just skim through the slides instead, you can do that as well:

This was a big personal accomplishment for me: it was my first time giving a technical presentation of this kind, let alone one on this scale.

I hope you find the talk informative!

If you liked this post, feel free to check out azigy, the app I'm currently working on. You can also take a look at "The Art of Casually Building", the talk I gave a week prior to this one at Startup Shell, the University of Maryland's flagship startup incubator!